Understanding Scalability in Distributed Systems

This edition of The Balanced Engineer Newsletter dives into scalability in distributed systems, covering common approaches to scaling and the challenges of achieving it!

March Theme: Distributed Systems

This month we are discussing distributed systems, and this week we are focusing on scalability, one of the key reasons folks tend to chase distributed architectures.

What is scalability?

Scalability is a system’s ability to handle increasing workloads gracefully, without performance degrading disproportionately as load grows. Scalable systems can accommodate growth, whether that’s more users, more data, or more transactions.

There are two primary approaches for scaling:

Vertical Scaling (Scaling Up): Adding more power to your existing machines, such as faster CPUs, more RAM, or larger storage.

Horizontal Scaling (Scaling Out): Adding more machines to your system, typically by distributing your workload across multiple servers.
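With horizontal scaling, a common back-of-the-envelope question is how many machines you need. Here's a rough sketch, assuming every server handles about the same throughput, load spreads evenly, and you keep some spare headroom for spikes and failures. The `servers_needed` function and its numbers are purely illustrative.

```python
import math


def servers_needed(peak_rps: float, per_server_rps: float, headroom: float = 0.3) -> int:
    """Rough server count for a horizontally scaled tier.

    Assumes identical servers and that load spreads evenly across them.
    `headroom` is extra capacity kept in reserve (0.3 means 30%).
    """
    if per_server_rps <= 0:
        raise ValueError("per_server_rps must be positive")
    if headroom < 0:
        raise ValueError("headroom must be non-negative")
    return math.ceil(peak_rps * (1 + headroom) / per_server_rps)


# Hypothetical numbers: 12,000 requests/sec at peak, 1,500 requests/sec per server.
print(servers_needed(peak_rps=12_000, per_server_rps=1_500))  # 11
```

Scaling up would raise `per_server_rps`; scaling out raises the server count. Per-server throughput may not stay constant as you add machines (shared databases and coordination add overhead), so treat this as a starting estimate.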

The pillars of scalable distributed systems

1. Statelessness

Stateless components don't retain information about previous interactions. This makes horizontal scaling much simpler—any server can handle any request regardless of which server handled previous requests from the same user.

For example, a stateful system might have servers remember a user's shopping cart. This becomes a problem when a different server handles the user's next request, because that server has no record of the cart's contents.

A stateless system (which is better for scaling) might instead keep the cart in browser storage and send it along with each request, or in a shared data store that every server can reach, so that any server can read and update it.
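Here's a minimal sketch of the shared-store flavor of this idea. `SharedCartStore` is a hypothetical in-memory stand-in for an external store such as Redis or a database; the point is that `StatelessServer` keeps no cart state of its own, so two different servers can serve the same user back to back.

```python
class SharedCartStore:
    """Hypothetical stand-in for an external store every server can reach."""

    def __init__(self) -> None:
        self._carts: dict[str, list[str]] = {}

    def get(self, user_id: str) -> list[str]:
        return list(self._carts.get(user_id, []))  # return a copy

    def put(self, user_id: str, items: list[str]) -> None:
        self._carts[user_id] = list(items)


class StatelessServer:
    """Holds no per-user state; every request reads and writes the shared store."""

    def __init__(self, name: str, store: SharedCartStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, user_id: str, item: str) -> list[str]:
        cart = self.store.get(user_id)
        cart.append(item)
        self.store.put(user_id, cart)
        return cart


store = SharedCartStore()
server_a = StatelessServer("a", store)
server_b = StatelessServer("b", store)

server_a.add_to_cart("user-42", "book")          # first request lands on server a
print(server_b.add_to_cart("user-42", "pen"))    # ['book', 'pen'] from server b
```

A real shared store would also need to handle two servers updating the same cart at once (for example with atomic operations), which this sketch ignores.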

2. Partitioning/Sharding

Partitioning (also called sharding) allows you to break your data or workload into smaller, more manageable pieces by distributing them across multiple resources.

For example, a database might have multiple servers, where server 1 holds users A-G, server 2 holds users H-N, and server 3 holds users O-Z.
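Routing a request for that A-G / H-N / O-Z layout could look something like this sketch. It assumes users are partitioned by the first letter of their username, and the server names are hypothetical.

```python
import bisect

# Inclusive upper bound of each letter range, in sorted order, and the server
# that owns it. Mirrors the A-G / H-N / O-Z example; names are hypothetical.
RANGE_UPPER_BOUNDS = ["G", "N", "Z"]
SERVERS = ["server-1", "server-2", "server-3"]


def server_for_user(username: str) -> str:
    """Range-based partitioning: find the first range whose upper bound >= letter."""
    first = username[:1].upper()
    if not ("A" <= first <= "Z"):
        raise ValueError(f"unsupported username: {username!r}")
    return SERVERS[bisect.bisect_left(RANGE_UPPER_BOUNDS, first)]


print(server_for_user("alice"), server_for_user("Henry"), server_for_user("zoe"))
# server-1 server-2 server-3
```

Range partitioning keeps neighboring keys together, which makes range scans cheap, but it can create hot spots if many users share the same starting letters.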

There are a few different strategies for partitioning, such as:

Range-based: e.g., users A-M on one server, N-Z on another

Hash-based: using a hash function to determine placement

Directory-based: using a lookup service to track where data lives
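The hash-based and directory-based strategies above can be sketched side by side. The shard names, `hash_shard`, and `ShardDirectory` are hypothetical; the hash version assumes a fixed shard count, and the directory version is an in-memory dict standing in for a lookup service.

```python
import hashlib

SHARDS = ["shard-0", "shard-1", "shard-2", "shard-3"]


def hash_shard(key: str, shards: list[str] = SHARDS) -> str:
    """Hash-based placement: a stable hash of the key picks the shard."""
    # Python's built-in hash() for strings is randomized per process, so use a
    # stable digest that every server computes the same way.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]


class ShardDirectory:
    """Directory-based placement: an explicit lookup table from key to shard."""

    def __init__(self) -> None:
        self._locations: dict[str, str] = {}

    def place(self, key: str, shard: str) -> None:
        # Also used to move a key: only this one entry changes.
        self._locations[key] = shard

    def lookup(self, key: str) -> str:
        return self._locations[key]  # KeyError if the key was never placed


print(hash_shard("alice"))  # same shard every time, on every server

directory = ShardDirectory()
directory.place("alice", "shard-2")
directory.place("alice", "shard-0")  # relocate one hot key without touching others
print(directory.lookup("alice"))  # shard-0
```

One trade-off worth knowing: with simple modulo hashing, changing the number of shards remaps most keys, which is why consistent hashing is a common alternative. A directory avoids that but becomes a critical service you have to keep fast and available.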

3. Replication

Replication involves creating copies of your data across multiple locations. This improves reliability, since losing one copy doesn't mean losing the data, and read scalability, since reads can be spread across the copies instead of all hitting a single server.

There are a few different strategies for replication, such as:

Leader-follower: One server is the primary writer; others are read-only copies

Multi-leader: Multiple servers can accept writes

Leaderless: Any replica can accept reads and writes
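To make leader-follower replication a bit more concrete, here's a toy, single-process sketch. It assumes asynchronous replication (followers catch up only when `replicate()` runs), which is why a read from a follower can briefly return stale data. All class and method names are hypothetical.

```python
import itertools


class Replica:
    def __init__(self, name: str) -> None:
        self.name = name
        self.data: dict[str, object] = {}
        self.applied = 0  # how many leader log entries this replica has applied


class LeaderFollowerCluster:
    """Toy leader-follower replication.

    Writes go only to the leader, which appends them to a log. Followers
    apply the log when replicate() runs (simulating asynchronous
    replication), and reads rotate across the followers.
    """

    def __init__(self, follower_count: int) -> None:
        if follower_count < 1:
            raise ValueError("need at least one follower")
        self.leader = Replica("leader")
        self.log: list[tuple[str, object]] = []
        self.followers = [Replica(f"follower-{i}") for i in range(follower_count)]
        self._read_order = itertools.cycle(self.followers)

    def write(self, key: str, value: object) -> None:
        self.log.append((key, value))
        self.leader.data[key] = value
        self.leader.applied = len(self.log)

    def replicate(self) -> None:
        """Ship each follower the log entries it has not applied yet."""
        for follower in self.followers:
            for key, value in self.log[follower.applied:]:
                follower.data[key] = value
            follower.applied = len(self.log)

    def read(self, key: str) -> object:
        """Read from the next follower; may be stale until replicate() runs."""
        return next(self._read_order).data.get(key)


cluster = LeaderFollowerCluster(follower_count=2)
cluster.write("greeting", "hello")
print(cluster.read("greeting"))  # None: follower-0 hasn't caught up yet
cluster.replicate()
print(cluster.read("greeting"))  # hello (served by follower-1)
```

That gap between a write and followers catching up is known as replication lag, and it's the price leader-follower setups pay for cheap, scalable reads.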

4. Load Balancing

Load balancing involves distributing incoming traffic across multiple servers so that no single server becomes a bottleneck.

Load balancers use a range of algorithms, from simple to sophisticated, to route traffic, such as:

Round-robin

Least connections

Resource-based (CPU/memory usage)

Geo-proximity
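The first two algorithms on that list are simple enough to sketch in a few lines. These classes are hypothetical illustrations, not any particular load balancer's implementation, and they assume a fixed list of healthy servers.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("need at least one server")
        self._rotation = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._rotation)


class LeastConnectionsBalancer:
    """Sends each new connection to the server with the fewest open ones."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("need at least one server")
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first server (in list order) among any ties.
        server = min(self.active, key=self.active.__getitem__)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lc = LeastConnectionsBalancer(["app-1", "app-2"])
first = lc.acquire()   # app-1
second = lc.acquire()  # app-2
lc.release(first)      # app-1's connection finishes
print(lc.acquire())    # app-1 again, since it now has the fewest connections
```

Round-robin works well when requests cost about the same; least connections adapts better when some requests (like long-lived connections) tie up a server much longer than others.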

5. Caching

Caching stores frequently accessed data in a faster storage layer, often memory, closer to where it's needed, which reduces the load on your primary data stores.

Caching can happen at several layers, such as:

Browser caches

CDN caches

Application caches (like Redis)

Database caches
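At the application layer, one common pattern is cache-aside: check the cache first and only go to the primary data store on a miss. Here's a minimal in-process sketch, assuming a simple time-to-live for freshness; `TTLCache` and `load_profile_from_db` are hypothetical names, and a real deployment would more likely use a shared cache like Redis.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Cache-aside lookup with a time-to-live.

    On a hit, return the cached value; on a miss or an expired entry,
    call `loader` (standing in for the primary data store) and cache the result.
    """

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries: dict[Any, tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: Any, loader: Callable[[Any], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            self.hits += 1
            return entry[1]
        self.misses += 1
        value = loader(key)
        self._entries[key] = (now + self.ttl_seconds, value)
        return value


def load_profile_from_db(user_id: str) -> dict:
    # Hypothetical slow query against the primary database.
    return {"id": user_id, "name": "Ada"}


cache = TTLCache(ttl_seconds=60)
cache.get_or_load("user-1", load_profile_from_db)  # miss: hits the "database"
cache.get_or_load("user-1", load_profile_from_db)  # hit: served from memory
print(cache.hits, cache.misses)  # 1 1
```

This sketch only replaces entries when they expire and are requested again, so memory grows with the number of distinct keys; real caches bound their size with eviction policies such as LRU.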

Scalability challenges

1. Consistency vs. Availability

The CAP theorem tells us that a distributed system can't guarantee all three of Consistency, Availability, and Partition tolerance at the same time. Because network partitions will happen in any real distributed system, partition tolerance is non-negotiable, so when a partition occurs we must choose between consistency and availability.
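To make that trade-off concrete, here's a toy model of a single replica that has lost contact with its peers. The `Replica`, `Mode`, and `Unavailable` names are hypothetical, and this is not a real consistency protocol: it just shows the choice a partitioned node faces between refusing to answer (favoring consistency) and answering with data that might be stale (favoring availability).

```python
from enum import Enum


class Mode(Enum):
    CP = "prefer consistency"
    AP = "prefer availability"


class Unavailable(Exception):
    """Raised when a replica refuses to answer rather than risk a stale read."""


class Replica:
    def __init__(self, mode: Mode) -> None:
        self.mode = mode
        self.value = None
        self.partitioned = False  # True while this replica can't reach its peers

    def read(self):
        if self.partitioned and self.mode is Mode.CP:
            # It can't confirm it has the latest write, so it gives up availability.
            raise Unavailable("partitioned: refusing a possibly stale read")
        # Otherwise answer with the local copy, which may be stale during a partition.
        return self.value


for mode in (Mode.CP, Mode.AP):
    replica = Replica(mode)
    replica.value = "v1"
    replica.partitioned = True
    try:
        print(mode.name, "->", replica.read())
    except Unavailable as err:
        print(mode.name, "->", err)
```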

2. Database Scaling

Databases often become bottlenecks as systems grow. Solutions include:

Read replicas for read-heavy workloads

Partitioning/sharding for write-heavy workloads

NoSQL databases (like DynamoDB or Cosmos DB) for workloads that fit their data models

3. Service Interdependencies

As systems grow, services become increasingly dependent on each other. There are a few strategies to manage these dependencies, such as:

Circuit breakers to prevent cascading failures

Asynchronous communication where possible

Clear service boundaries and contracts
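Circuit breakers are the most code-shaped item on that list, so here's a minimal single-threaded sketch of the pattern. The class name, the consecutive-failure threshold, and the timeout-based "half-open" trial are illustrative choices; production libraries often add thread safety, sliding windows of failures, and metrics.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(Exception):
    """Raised instead of calling the dependency while the circuit is open."""


class CircuitBreaker:
    """After `failure_threshold` consecutive failures the circuit opens and calls
    fail fast. Once `reset_timeout` seconds pass, the next call goes through as a
    trial (half-open): success closes the circuit, failure re-opens it."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            raise CircuitOpenError("circuit open; failing fast")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures in a row, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None
        return result


breaker = CircuitBreaker(failure_threshold=3, reset_timeout=30.0)


def call_inventory_service():
    # Hypothetical downstream call that is currently failing.
    raise ConnectionError("inventory service unreachable")


for attempt in range(5):
    try:
        breaker.call(call_inventory_service)
    except CircuitOpenError:
        print(attempt, "failing fast")
    except ConnectionError:
        print(attempt, "downstream error")
```

Failing fast keeps callers from piling up threads and retries on a dependency that's already struggling, which is how one slow service avoids dragging everything upstream down with it.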

Measuring scalability

How do you know if your system is truly scalable? Try to measure the following:

Response time under load: How does performance change as traffic increases?

Resource utilization: How efficiently are you using your infrastructure?

Cost efficiency: How does cost grow as your user base grows?

Breaking points: Where and how does the system fail under extreme load?
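For response time under load, averages can hide the slow requests users actually notice, so it's common to track percentiles like p95 and p99 at each load level. Here's a small sketch using the nearest-rank method; the function names and the input shape (a dict from load level to latency samples in milliseconds) are assumptions for illustration.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(latencies_by_load: dict[int, list[float]]) -> dict[int, dict[str, float]]:
    """Map each load level (e.g. requests/sec) to its p50, p95, and p99 latency."""
    return {
        load: {
            "p50": percentile(samples, 50),
            "p95": percentile(samples, 95),
            "p99": percentile(samples, 99),
        }
        for load, samples in sorted(latencies_by_load.items())
    }
```

Feed `summarize` the latencies you record at each step of a load test: if p99 climbs much faster than the load does, you've likely found where a breaking point starts.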

The Deep Dive

One of the best distributed systems books that I come back to time and time again is Designing Data-Intensive Applications by Martin Kleppmann. If you're looking to really dive deep, it explains many of the strategies I discussed here in much more detail!

Thank you!

If you made it this far, then thank you!

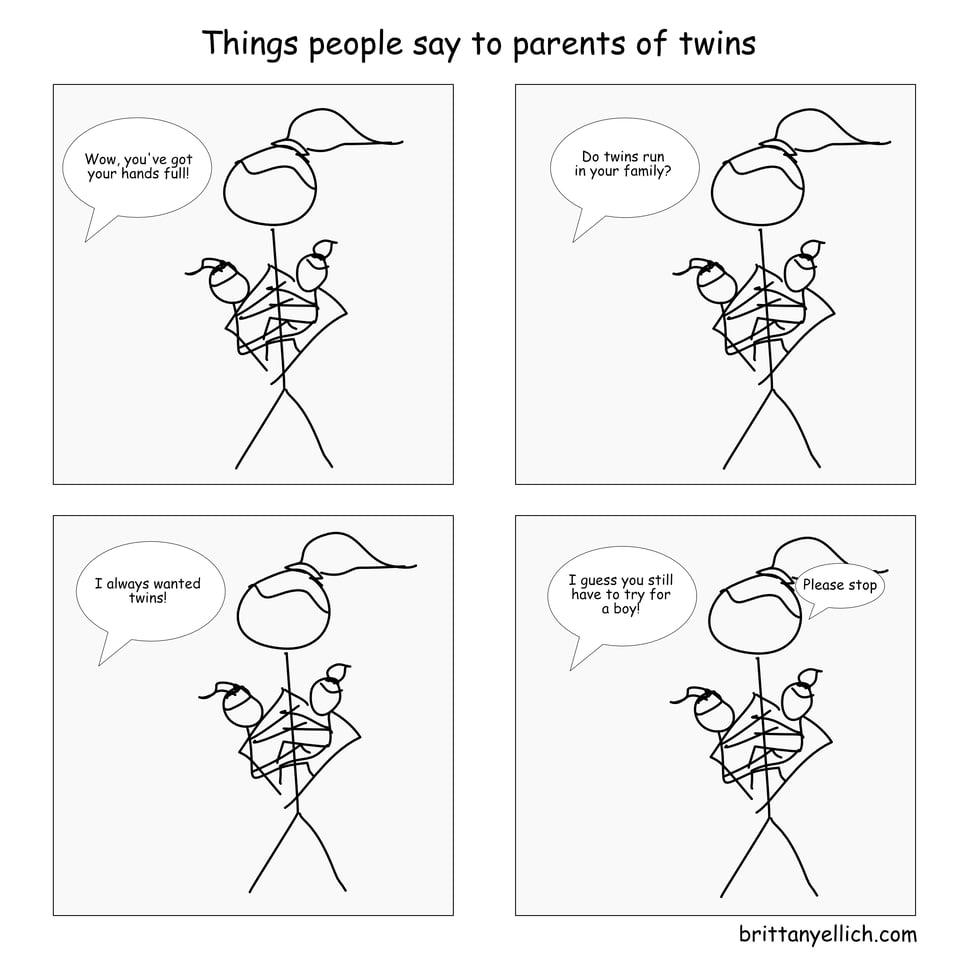

Here’s a silly web comic I made this week:

Have comments or questions about this newsletter? Or just want to be internet friends? Send me an email at brittany@balancedengineer.com or reach out on Bluesky or LinkedIn.