Concurrency in Distributed Systems

This week, I'm diving into concurrency challenges in distributed systems and how to tackle them!

March Theme: Distributed Systems

This month we are discussing distributed systems, and this week we are focusing on concurrency, or the ability to have several things in progress at once.

What is concurrency?

Concurrency refers to multiple computations happening within overlapping time periods. In distributed systems, concurrency is inevitable because many processes are running on different machines, handling different tasks, often accessing the same data.

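This is a bit different from parallelism, where computations literally execute at the same instant, for example on separate cores or machines. Concurrent tasks only need to overlap in time; they may simply take turns.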

Think of it this way: parallelism is like adding more checkout lanes at a grocery store, while concurrency is like having a single cashier efficiently handle multiple customers who are at different stages of their checkout experience.

Challenges of Concurrency

There are a few common issues that come up with concurrency, each of which can be identified based on the pattern of bugs that arise.

1. Race Conditions

When multiple processes access and manipulate the same data concurrently, the outcome can depend on the timing of the operations, leading to unpredictable results.
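To make the timing problem concrete, here is a minimal Python sketch that replays one unlucky interleaving by hand instead of using real threads, so the result is the same on every run. The `balance` dictionary and the `read_balance` / `write_balance` helpers are hypothetical names made up for this illustration.

```python
# Hypothetical shared state: a single account balance.
balance = {"amount": 100}


def read_balance():
    return balance["amount"]


def write_balance(value):
    balance["amount"] = value


# Unlucky interleaving: both processes read before either one writes.
p1_seen = read_balance()     # process 1 reads 100
p2_seen = read_balance()     # process 2 also reads 100
write_balance(p1_seen + 50)  # process 1 writes 150
write_balance(p2_seen - 25)  # process 2 overwrites with 75; the +50 is lost
lost_update_result = read_balance()

# Serial execution: each process reads only after the previous write finished.
balance["amount"] = 100
write_balance(read_balance() + 50)
write_balance(read_balance() - 25)
serial_result = read_balance()

print(lost_update_result, serial_result)
```

With real threads the bad interleaving only happens some of the time, which is exactly why these bugs are so hard to reproduce.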

Process 1: Read balance = $100, add $50

Process 2: Read balance = $100, subtract $25

The correct result is $125, but the balance might end up as $75 or $150, depending on which write happens last.

2. Deadlocks

Deadlocks occur when two or more processes are waiting for each other to release resources, resulting in none being able to proceed.
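One common way to avoid this kind of circular wait is to make every process acquire locks in the same global order. The sketch below assumes a hypothetical `Resource` class and orders locks by resource name; it is an illustration of the idea rather than a complete deadlock-prevention scheme.

```python
import threading


class Resource:
    """Hypothetical resource guarded by its own lock."""

    def __init__(self, name):
        self.name = name
        self.lock = threading.Lock()


def use_both(first, second, log):
    # Acquire locks in a fixed global order (by name), no matter which
    # order the caller passed the resources in.
    ordered = sorted((first, second), key=lambda r: r.name)
    with ordered[0].lock:
        with ordered[1].lock:
            log.append((ordered[0].name, ordered[1].name))


resource_a = Resource("A")
resource_b = Resource("B")
acquisition_log = []

# The two threads ask for the resources in opposite orders, which is the
# classic setup for a deadlock if each grabbed "its" first lock directly.
t1 = threading.Thread(target=use_both, args=(resource_a, resource_b, acquisition_log))
t2 = threading.Thread(target=use_both, args=(resource_b, resource_a, acquisition_log))
t1.start()
t2.start()
t1.join(timeout=5)
t2.join(timeout=5)
```

Because both threads always take lock A before lock B, neither can end up holding one lock while waiting on the other.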

Process 1: Has Resource A, needs Resource B

Process 2: Has Resource B, needs Resource A

Result: Both processes wait forever

3. Livelocks

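Similar to deadlocks, livelocks occur when processes continuously change their state in response to each other without making progress. Picture two people in a hallway who keep stepping aside in the same direction to let each other pass: both are busy, but neither gets anywhere.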

4. Starvation

Some processes may be indefinitely denied the resources they need to do their work, for example because other processes keep being granted those resources first.

Concurrency Control Mechanisms

There are a few common ways to prevent concurrency-related bugs.

1. Locking Strategies

Locks (also often called mutexes) prevent multiple processes from accessing the same resource simultaneously. There are a few different types of locking strategies:

- Pessimistic locking: Lock resources before access, preventing others from accessing until the action is complete

- Optimistic locking: Allow access without locking, but before committing, check a hash or version number on the item to verify that nothing changed in the meantime
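The two strategies above can be sketched in Python as follows. `PessimisticCounter`, `VersionedRecord`, and `optimistic_add` are hypothetical names for this illustration; a real database or cache would provide its own version-check primitive.

```python
import threading


class PessimisticCounter:
    """Pessimistic: take the lock first, then touch the data."""

    def __init__(self):
        self._lock = threading.Lock()
        self.value = 0

    def increment(self):
        with self._lock:  # nobody else can read or write until we finish
            self.value += 1


class VersionedRecord:
    """Optimistic: work freely, then commit only if the version is unchanged."""

    def __init__(self, value):
        self.value = value
        self.version = 0
        self._commit_lock = threading.Lock()  # guards only the short check-and-set

    def read(self):
        with self._commit_lock:
            return self.value, self.version

    def commit(self, new_value, expected_version):
        with self._commit_lock:
            if self.version != expected_version:
                return False  # someone else committed first; caller must retry
            self.value = new_value
            self.version += 1
            return True


def optimistic_add(record, delta):
    # Retry loop: re-read and recompute whenever the version check fails.
    while True:
        value, version = record.read()
        if record.commit(value + delta, version):
            return


counter = PessimisticCounter()
record = VersionedRecord(100)


def worker():
    for _ in range(1000):
        counter.increment()
        optimistic_add(record, 1)


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Pessimistic locking tends to suit data with frequent conflicts, while optimistic locking avoids waiting when conflicts are rare, at the cost of occasional retries.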

2. Atomic Operations

An atomic operation completes as a single, indivisible unit of work, so no other process can interrupt it partway through and cause an inaccurate result:

Non-atomic (problematic):

read_balance()

increment_balance()

write_balance()

Atomic (safe):

atomic_increment_balance()

3. Transaction Isolation Levels

Database systems offer various isolation levels:

- Read Uncommitted: Lowest isolation, allows dirty reads where one transaction can read data from other transactions that aren’t committed yet

- Read Committed: Guarantees that any data read was already committed at the moment it was read, avoiding dirty reads

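- Repeatable Read: Guarantees that data read once in a transaction returns the same values if it is read again; lock-based implementations do this by holding read locks on every read and write locks on every write/update operation until the transaction ends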

- Serializable: Highest isolation level; the outcome of concurrent transactions is guaranteed to match some order in which they ran one at a time

4. Consensus Algorithms

For distributed agreement when multiple nodes must coordinate:

- Paxos: Classic consensus algorithm for reaching agreement among all computers in a distributed system, even if some of the nodes fail

- Raft: Another consensus algorithm, designed to be easier to understand than Paxos

- Zab: Powers Apache ZooKeeper's coordination
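A building block shared by majority-based protocols such as Raft is the quorum: a decision counts only once more than half of the nodes have acknowledged it, so any two quorums overlap in at least one node. The helper names below are hypothetical, and this sketch covers only the quorum arithmetic, not a full consensus protocol.

```python
def majority_quorum(cluster_size):
    """Smallest number of nodes that is more than half of the cluster."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size):
    """How many nodes can be down while a majority is still reachable."""
    return cluster_size - majority_quorum(cluster_size)


def is_committed(acks, cluster_size):
    """A value is safe once a majority of nodes has acknowledged it."""
    return acks >= majority_quorum(cluster_size)


for size in (3, 4, 5):
    print(size, majority_quorum(size), tolerated_failures(size))
```

Note that a 4-node cluster tolerates only one failure, the same as a 3-node cluster, which is why odd cluster sizes are the usual recommendation.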

Real-World Application Examples

1. Distributed Databases

Google's Spanner and CockroachDB use sophisticated timestamp ordering and distributed transactions to manage concurrency.

2. Message Queues

Apache Kafka and RabbitMQ help decouple systems and handle concurrent processing of events through message queues.
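The decoupling idea can be sketched in-process with Python's standard `queue.Queue`. This is a stand-in for illustration only and does not use the Kafka or RabbitMQ client APIs; the `STOP` sentinel and the doubling "work" are assumptions made up for the example.

```python
import queue
import threading

events = queue.Queue()   # thread-safe FIFO shared by producer and consumers
processed = []
processed_lock = threading.Lock()
STOP = object()          # hypothetical sentinel telling a consumer to exit


def consumer():
    while True:
        event = events.get()
        if event is STOP:
            break
        result = event * 2  # placeholder for real event handling
        with processed_lock:
            processed.append(result)


workers = [threading.Thread(target=consumer) for _ in range(3)]
for w in workers:
    w.start()

# The producer only knows about the queue, not about who consumes from it.
for i in range(10):
    events.put(i)
for _ in workers:
    events.put(STOP)  # one sentinel per consumer

for w in workers:
    w.join()
```

Each event is handled by exactly one consumer, but the order in which results arrive depends on scheduling, so downstream code should not rely on it.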

3. Collaborative Software

Google Docs uses Operational Transformation (OT) to manage concurrent edits. Conflict-free Replicated Data Types (CRDTs) are an alternative approach used by other collaborative tools.
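To give a feel for how a CRDT works, here is a minimal sketch of a grow-only counter (G-Counter), one of the simplest CRDTs. It is a textbook illustration, not how any particular product implements collaborative editing, and the `GCounter` class name is chosen for this example.

```python
class GCounter:
    """Grow-only counter: each replica increments only its own slot."""

    def __init__(self, replica_id):
        self.replica_id = replica_id
        self.counts = {}  # replica id -> increments seen from that replica

    def increment(self, amount=1):
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def merge(self, other):
        # Taking the per-replica maximum makes merges safe to apply in any
        # order and any number of times.
        for replica_id, count in other.counts.items():
            self.counts[replica_id] = max(self.counts.get(replica_id, 0), count)

    def value(self):
        return sum(self.counts.values())


# Two replicas accept updates independently, then exchange state.
replica_a = GCounter("a")
replica_b = GCounter("b")
replica_a.increment()
replica_a.increment()
replica_b.increment(3)

replica_a.merge(replica_b)
replica_b.merge(replica_a)
replica_a.merge(replica_b)  # merging again changes nothing
```

Both replicas converge on the same total without any locking or coordination, which is the property that makes CRDTs attractive for concurrent edits.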

4. Financial Systems

ACID transactions and distributed sagas help maintain consistency in concurrent financial operations.
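A saga splits one large operation into steps that each have a compensating action, so a failure partway through can be undone without a single global transaction. The sketch below is a simplified, in-memory illustration; `run_saga`, the account names, and the simulated failure are all hypothetical.

```python
def run_saga(steps):
    """Run (action, compensation) pairs; undo completed steps on failure."""
    completed = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            # Roll back in reverse order using the compensating actions.
            for undo in reversed(completed):
                undo()
            return False
        completed.append(compensate)
    return True


accounts = {"alice": 100, "bob": 0}


def debit_alice():
    accounts["alice"] -= 50


def refund_alice():
    accounts["alice"] += 50


def credit_bob():
    # Simulated failure of the second service.
    raise RuntimeError("credit service unavailable")


def reverse_credit_bob():
    accounts["bob"] -= 50


succeeded = run_saga([
    (debit_alice, refund_alice),
    (credit_bob, reverse_credit_bob),
])
```

Here the debit succeeds, the credit fails, and the refund restores the original balances. Real sagas also have to handle compensations that fail and steps that are retried, which this sketch leaves out.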

Final Thoughts

Concurrency is one of those topics where simplicity is your friend. Complex concurrency strategies often introduce bugs that are notoriously difficult to reproduce and fix. Start with higher-level abstractions when possible, and move to lower-level mechanisms only when necessary.

Next week, we'll dive into fault tolerance – because in distributed systems, it's not a question of if components will fail, but when.

The Deep Dive

One truly excellent resource related to concurrency is Database Internals by Alex Petrov. It has deep insights into how databases handle concurrent operations.

Thank you!

If you made it this far, then thank you! This month is a bit of a doozy for me in terms of things going on, so I’m trying to stick to some simple concepts that I have a lot of notes on to keep hitting my goal of releasing one newsletter per week.

I have a lot of very fun things coming out soon that I can’t wait to share!

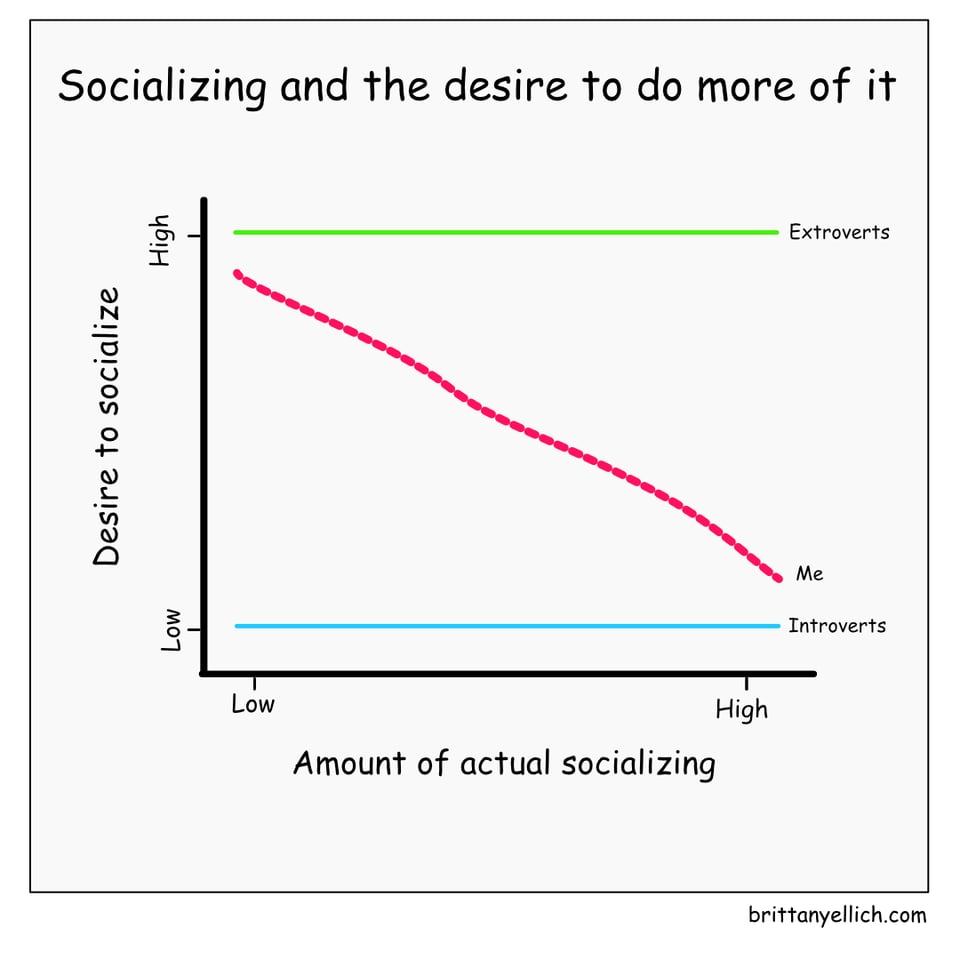

Here’s a silly web comic I made this week:
