Artificial Intelligence: Debugging

We celebrate 300 subscribers, explore examples of using AI for debugging, and share some new content for the week!

I'm excited to share that we've hit another milestone: 300 subscribers! It seems like just yesterday I was celebrating 100. I'm not super into marketing, so I'm cooling my jets on sharing the numbers all the time, but it has been a fun journey so far, and seeing that subscriber number climb is very motivating!

Thank you for continuing this journey with me as we explore the balance in software engineering!

Highlights

Things I've been doing on the internet

Here are some things I've made on the internet this week! It has been a busy week at work, but I managed to get a few things done!

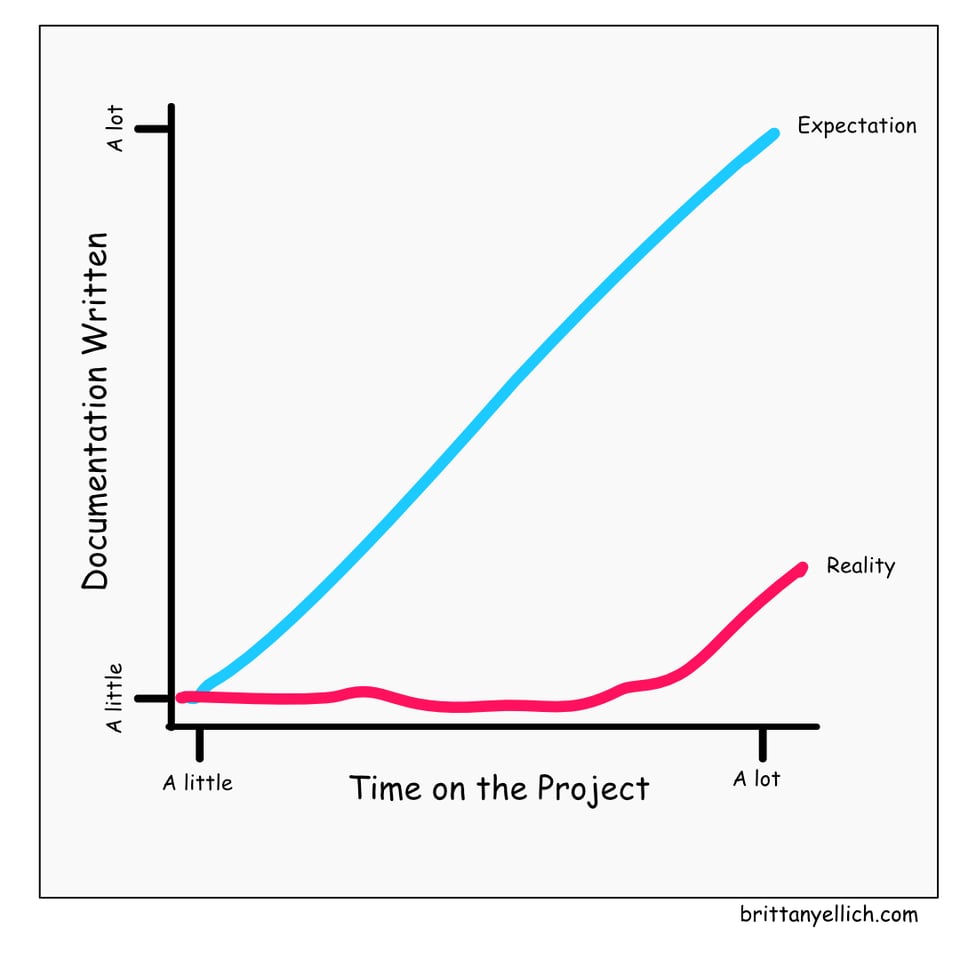

- Article: Documentation done right: A developer's guide

- I got another post on the GitHub Blog!

- Podcast: Overcommitted Ep. 7 | Decision Making

- Illustration:

Things I've enjoyed on the internet this week

It has been a busy week, so I haven't been doing a ton! I wanted to get out of the software space a bit and listen to something totally different, which is exactly what I did! Got something you want me to read and feature in this newsletter? Send it to me at brittany@balancedengineer.com!

- Book: Cosmos by Carl Sagan

- This book is great if you want to start your evening with a dose of existential dread about the vastness of the universe! It's also just really cool to read :)

On to the content!

This month, we're diving into artificial intelligence and how it can enhance your workflow as a software engineer. We started with a primer on what AI actually is, and now we're exploring specific use cases. Last week we talked about how I use it in my brainstorming process, and today, we're focusing on one of my personal favorites: using AI for debugging.

May Theme: Artificial Intelligence

Let's face it: debugging is often where we spend the bulk of our development time. Those moments when you're staring at an error message that might as well be written in hieroglyphics, or when you've been looking at the same function for so long that you can't see the obvious mistake anymore. That's where AI tools can be game-changers. I end up debugging both my writing and my code, so let's chat about each!

How I Use LLMs for Debugging Writing

Writing clearly is a crucial skill for software engineers, whether it's documentation, emails, or (ahem) newsletters. But sometimes our writing doesn't quite communicate what we intend. Here's how I use AI to debug my writing:

Finding Clarity in Confusion

When I've written something technical that feels unclear or overly complex, I use this prompt:

I've written this explanation of [topic], but I'm worried it might be too complex for my audience of [audience type]. Could you help me identify any parts that might be confusing and suggest clearer alternatives?

[Paste your text here]

The AI will often spot jargon I didn't realize was jargon, or places where I've made logical leaps that might leave readers behind.

Spotting Inconsistencies

For longer pieces like documentation or technical specs, I use:

Review this document for inconsistencies in terminology, approach, or logic. Flag any contradictions or places where I've used different terms for the same concept.

[Paste your document here]

This has saved me from countless embarrassing moments, like referring to the same function by three different names throughout a document.

Checking Tone and Accessibility

When writing emails or communications that need to strike the right tone:

Does this email sound [professional/friendly/collaborative]? Is there anything that might be misinterpreted or could come across differently than I intend?

[Paste your email here]

The feedback has helped me catch places where I might sound unintentionally harsh or where my attempts at brevity could come across as abrupt.

How I Use LLMs for Debugging Development Tasks

This is where I spend the majority of my debugging time. I primarily use Copilot Chat for these situations, and GitHub Copilot Chat in VS Code also has a few built-in slash commands that work really well, like /explain!

Let's break down a few scenarios:

When I'm Fixing Errors

Error messages can sometimes be cryptic, particularly with frameworks or libraries you're less familiar with. Here's my go-to prompt:

I'm getting this error in my [language/framework] application:

[Error message]

Here's the relevant code:

[Code snippet]

Can you explain what this error means in simple terms and suggest possible fixes?

The key is providing enough context, but not drowning the AI in your entire codebase. I focus on sharing:

- The exact error message

- The code that triggered it

- Any recent changes I made

- What I've already tried
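Because the template has a fixed shape, it can help to assemble it programmatically so none of those four pieces of context get forgotten. Here's a minimal TypeScript sketch of that idea; `DebugContext` and `buildErrorPrompt` are hypothetical names for illustration (not part of Copilot or any other tool), and the example inputs are made up.

```typescript
// Hypothetical shape for the context worth sharing with the AI.
interface DebugContext {
  stack: string; // language/framework, e.g. "JavaScript"
  errorMessage: string; // the exact error text, pasted verbatim
  codeSnippet: string; // only the code that triggered the error
  recentChanges?: string; // what changed right before the error appeared
  alreadyTried?: string[]; // fixes already attempted
}

// Fills the error-fixing prompt template, adding optional sections only when present.
function buildErrorPrompt(ctx: DebugContext): string {
  const sections: string[] = [
    `I'm getting this error in my ${ctx.stack} application:`,
    ctx.errorMessage,
    "Here's the relevant code:",
    ctx.codeSnippet,
  ];
  if (ctx.recentChanges) {
    sections.push(`Recent changes: ${ctx.recentChanges}`);
  }
  if (ctx.alreadyTried && ctx.alreadyTried.length > 0) {
    const attempts = ctx.alreadyTried.map((attempt) => `- ${attempt}`).join("\n");
    sections.push(`What I've already tried:\n${attempts}`);
  }
  sections.push(
    "Can you explain what this error means in simple terms and suggest possible fixes?",
  );
  // Blank lines between sections mirror the layout of the template.
  return sections.join("\n\n");
}

// Hypothetical usage with made-up details.
const errorPrompt = buildErrorPrompt({
  stack: "JavaScript",
  errorMessage: "TypeError: user.getName is not a function",
  codeSnippet: "const label = user.getName();",
  recentChanges: "Started loading the user from a cached JSON payload",
  alreadyTried: ["Logged user before the call: it is a plain object"],
});
console.log(errorPrompt);
```

Making "recent changes" and "what I've tried" optional keeps the prompt short when there's nothing to add, while still nudging you to fill them in.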

This approach has helped me understand those dreaded "undefined is not a function" JavaScript errors or cryptic Ruby gem dependency conflicts much faster than googling each individual error.
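For a concrete picture of the "is not a function" family of errors, here's a small, hypothetical TypeScript example (the `User` class and `labelFor` helper are invented for illustration). One common cause is a class instance that has been through JSON: the data survives, but the methods don't, so the call fails at runtime even though the types look fine. The exact wording of the error depends on the JavaScript engine.

```typescript
// Hypothetical class for illustration: a User with a method.
class User {
  first: string;
  last: string;
  constructor(first: string, last: string) {
    this.first = first;
    this.last = last;
  }
  getName(): string {
    return `${this.first} ${this.last}`;
  }
}

const original = new User("Ada", "Lovelace");
// A JSON round-trip keeps the data but drops the methods. JSON.parse returns `any`,
// so the compiler can't warn that getName is missing on the result.
const restored: User = JSON.parse(JSON.stringify(original));

// Calls getName and reports a runtime TypeError instead of crashing.
function labelFor(user: User): string {
  try {
    return user.getName();
  } catch (err) {
    if (err instanceof TypeError) return `TypeError: ${err.message}`;
    throw err;
  }
}

// One possible fix: copy the parsed data onto a real User instance.
const rehydrated = Object.assign(new User("", ""), JSON.parse(JSON.stringify(original)));

console.log(labelFor(original)); // Ada Lovelace
console.log(labelFor(restored)); // a TypeError; wording varies by engine
console.log(labelFor(rehydrated)); // Ada Lovelace
```

This is exactly the kind of bug where pasting the error plus the code that produced `restored` gives the AI enough to point at the JSON round-trip.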

When I Have No Clue What I'm Reading

We've all been there: dropped into a codebase with no documentation, trying to make sense of what's happening. Here's my debugging prompt for these situations:

I'm trying to understand this code snippet and how it fits into the larger application. Can you explain what it's doing line by line, and what its purpose might be?

[Code snippet]

Additional context:

- This is part of a [type of application]

- It seems to interact with [other systems/components]

- I need to [make changes/fix a bug/add a feature] to this area

The AI can often identify patterns or architectural approaches that help me get oriented faster. It's like having a pair programmer who can quickly catch you up on what's happening.

When My Tests Keep Failing

This is one of my favorite use cases:

My test is failing with this error:

[Test failure output]

Here's my test code:

[Test code]

And here's the implementation I'm testing:

[Implementation code]

What might be causing the test to fail, and how could I fix it?

AI is particularly good at spotting when my test expectations don't match what my code is actually doing, or when I'm missing an edge case.
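Here's a small, hypothetical example of that kind of mismatch: the test expects 0 for an empty list, but the implementation divides by zero and returns NaN. The names `average` and `check` are made up, and the hand-rolled `check` stands in for a real test framework so the sketch runs on its own.

```typescript
// Hypothetical implementation under test: the mean of a list of numbers.
function average(values: number[]): number {
  const total = values.reduce((sum, v) => sum + v, 0);
  return total / values.length; // for [], this is 0 / 0, which is NaN
}

// Tiny hand-rolled check using strict equality, in place of a test framework.
function check(actual: number, expected: number, label: string): string {
  return actual === expected
    ? `PASS ${label}`
    : `FAIL ${label}: expected ${expected}, got ${actual}`;
}

console.log(check(average([2, 4, 6]), 4, "average([2, 4, 6])")); // PASS average([2, 4, 6])
console.log(check(average([]), 0, "average([])")); // FAIL average([]): expected 0, got NaN

// One possible fix, if callers really want 0 for an empty list.
function averageFixed(values: number[]): number {
  if (values.length === 0) return 0;
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

console.log(check(averageFixed([]), 0, "averageFixed([])")); // PASS averageFixed([])
```

Whether an empty list should return 0, throw, or return NaN is a design decision; the point is that the test and the implementation have to agree, and sharing both is what lets the AI notice they don't.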

Tips for Effective AI Debugging

Through trial and error, I've learned a few things that make AI debugging more effective:

- Be specific about what you need help with: vague questions get vague answers. "Why isn't this working?" will give you less helpful responses than "Why is this React component re-rendering when I change this state value?"

- Provide relevant context, not everything: the AI doesn't need your entire application, just the parts relevant to your problem.

- Tell the AI what you've already tried: this saves time and gets you more advanced suggestions.

- Ask for explanations, not just solutions: understanding why the fix works helps you avoid similar bugs in the future.

- Use AI as a debugging partner, not a replacement for understanding: the goal is to learn and become more self-sufficient, not to develop a dependency.
